1. Static Analysis and Software Security – Importance and Challenges

A software vulnerability is a weakness in the specification, development, or configuration of software whose exploitation can violate a security policy. The exploitation of a single vulnerability can have far-reaching consequences for both the users and the owning enterprise of the compromised software, including financial losses and reputational damage. For instance, the Equifax breach (CVE-2017-5638) allowed criminals to expose the personal data of more than 143 million Equifax customers, at a total cost of $1.35 billion according to the company's financial results for the first quarter of 2019. In fact, critical security breaches, including the Equifax breach and Heartbleed (CVE-2014-0160), were caused by flaws that resided in the source code and could have been avoided had these flaws been detected and eliminated in the first place. Hence, to avoid potential damage, software development enterprises are seeking mechanisms that assist in identifying and eliminating vulnerabilities as early in the development cycle as possible.

One such mechanism is static analysis, a white-box testing technique that searches for potential software bugs (including vulnerabilities) by analyzing the source code of a software product without executing it. Automatic static analysis (ASA) is considered an important technique for building security into the software development process. This view is shared by several experts in the field of software security (e.g., Gary McGraw, Brian Chess, and Michael Howard), and almost all well-established secure software development lifecycles (SDLCs), including Microsoft's SDL [1], OWASP's OpenSAMM [2], and Cigital's Touchpoints [3], propose static analysis as the main mechanism for adding security during the coding phase of the SDLC. In addition, ASA is a security activity commonly adopted by major technology firms such as Google, Microsoft, Adobe, and Intel, as reported by the BSIMM [4] initiative.

Despite its effectiveness in detecting security vulnerabilities early in the development process, the practicality of ASA is hindered by the large number of warnings (i.e., alerts) it produces, which developers must inspect manually to decide which ones require immediate attention. More specifically, ASA produces a large number of false positives, i.e., alerts that do not correspond to actual issues, because of the approximations it must make for the analysis to complete in a reasonable time; moreover, even some of the actual issues it reports (i.e., true positives) may not be critical from a security viewpoint. Hence, there is a strong need either for more accurate static code analyzers or for mechanisms that post-process the results of static code analyzers and report the warnings that are most critical from a security viewpoint. Since building a flawless static code analyzer is known to be an undecidable problem, researchers have shifted their focus towards the latter approach.
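To make the approximation problem concrete, the following toy checker (a minimal sketch, not any real analyzer) parses Python source with the standard `ast` module, without executing it, and flags every call to a method named `execute` whose first argument is built by string concatenation. The sink name and both sample functions are hypothetical. Because the checker does not track whether the concatenated value was sanitized, it also reports the `safe` function, which is a false positive of exactly the kind described above.

```python
import ast

# Hypothetical sink name, chosen for illustration only.
SINKS = {"execute"}

SOURCE = '''
def unsafe(cursor, user_input):
    cursor.execute("SELECT * FROM t WHERE id = " + user_input)

def safe(cursor, user_input):
    clean = str(int(user_input))  # sanitized: only digits survive
    cursor.execute("SELECT * FROM t WHERE id = " + clean)
'''

def find_alerts(source):
    """Flag sink calls whose first argument is a concatenation.

    The check is deliberately coarse: it ignores data flow, so it cannot
    see that `clean` was sanitized and over-approximates by reporting it.
    """
    alerts = []
    tree = ast.parse(source)  # the code is parsed, never executed
    for func in ast.walk(tree):
        if not isinstance(func, ast.FunctionDef):
            continue
        for node in ast.walk(func):
            if (isinstance(node, ast.Call)
                    and isinstance(node.func, ast.Attribute)
                    and node.func.attr in SINKS
                    and node.args
                    and isinstance(node.args[0], ast.BinOp)):
                alerts.append((func.name, node.lineno))
    return alerts

for name, line in find_alerts(SOURCE):
    print(f"possible SQL injection in {name}() at line {line}")
```

Making the check precise would require tracking data flow through `int()` and every other possible sanitizer, which is where real analyzers trade precision for analysis time.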

2. IoTAC Security Alerts Criticality Assessor

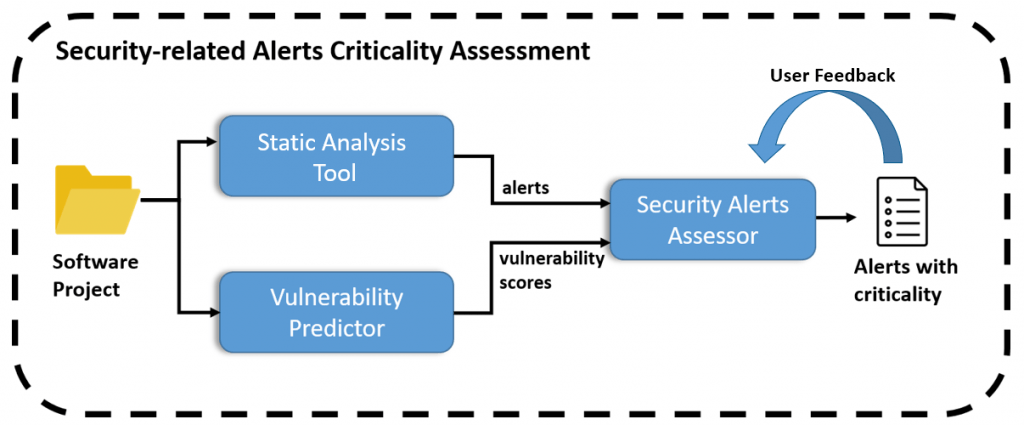

Within the context of the IoTAC project, and in an attempt to address the aforementioned problem, we proposed a novel mechanism for assessing the criticality of security-related static analysis alerts. In particular, we developed a self-adaptive technique, the Security Alerts Criticality Assessor (SACA), which classifies and prioritizes security-related static analysis alerts based on their criticality by considering information retrieved from (i) the alerts themselves, (ii) vulnerability prediction, and (iii) user feedback. The proposed technique is based on machine learning models, in particular neural networks, which were built using data retrieved from static analysis reports of real-world software applications. A high-level overview of the tool is presented in Figure 1.

Figure 1: The high-level overview of the Security Alerts Criticality Assessor (SACA) mechanism

As can be seen in Figure 1, a software project is provided as input to the system. First, security-specific static analysis is applied to retrieve the static analysis alerts of the selected project. In addition, vulnerability prediction models compute the vulnerability scores of its software components, which reflect the likelihood that each component contains vulnerabilities. The alerts, along with the vulnerability scores, are then provided as input to the Security Alerts Assessor (SAA), a neural network that assesses how critical each alert is from a security viewpoint. More specifically, for each received alert it reports (i) a criticality flag, i.e., a binary value (0 or 1), with 1 denoting that the alert is considered critical and 0 denoting that it is considered non-critical, and (ii) a criticality score, i.e., a continuous value in the [0, 1] interval denoting how likely the alert is to be critical from a security viewpoint. Hence, the developer can either filter out the alerts that the model does not mark as critical, or rank the alerts by their criticality score and fix first those that are most likely to correspond to critical vulnerabilities, thereby increasing the probability of detecting and mitigating actual vulnerabilities.
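The filter-and-rank workflow can be sketched as follows. This is an illustration under stated assumptions, not the SAA itself: a single logistic unit with hand-picked, hypothetical weights stands in for the trained neural network, its only inputs are a normalized analyzer severity and the component's vulnerability score, and the criticality flag is assumed to be obtained by thresholding the score at 0.5. The `Alert` fields, file names, and CWE examples are invented for the example.

```python
import math
from dataclasses import dataclass

@dataclass
class Alert:
    rule: str        # e.g., a CWE identifier reported by the analyzer
    component: str   # file in which the alert was raised
    severity: float  # analyzer-reported severity, normalized to [0, 1]

# Hypothetical parameters standing in for the trained SAA network.
WEIGHTS = (3.0, 4.0)  # for (severity, vulnerability score)
BIAS = -3.5
THRESHOLD = 0.5       # assumed cut-off that turns the score into a flag

def criticality_score(alert, vuln_scores):
    """Combine alert features with the component's vulnerability score."""
    x = (alert.severity, vuln_scores.get(alert.component, 0.0))
    z = BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))  # logistic output in (0, 1)

def assess(alerts, vuln_scores):
    """Return (alert, flag, score) triples, most critical first."""
    results = []
    for alert in alerts:
        score = criticality_score(alert, vuln_scores)
        results.append((alert, int(score >= THRESHOLD), score))
    return sorted(results, key=lambda r: r[2], reverse=True)

alerts = [
    Alert("CWE-79", "Login.java", 0.9),
    Alert("CWE-89", "Report.java", 0.8),
    Alert("CWE-563", "Utils.java", 0.2),
]
vuln_scores = {"Login.java": 0.7, "Report.java": 0.1, "Utils.java": 0.3}

ranked = assess(alerts, vuln_scores)
critical_only = [a for a, flag, _ in ranked if flag == 1]  # filtering view
for alert, flag, score in ranked:                          # ranking view
    print(f"{alert.rule:8} {alert.component:12} flag={flag} score={score:.2f}")
```

With these numbers, only the CWE-79 alert in `Login.java` is flagged as critical, and it also heads the ranking; the ranking view still lets the developer continue down the list past the flagged alerts.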

As illustrated in Figure 1, the user can also correct the outputs of the model, and the SAA can be retrained on demand based on the received feedback. In this way, the SAA adapts to the specific characteristics of the software product to which it is applied and provides more accurate assessments for that product. This is important because the criticality of specific alerts (security weaknesses) also depends on the type of product in which they occur. For instance, cross-site scripting (XSS) weaknesses may be important for web applications but irrelevant for offline applications. Hence, the self-adaptiveness of the proposed approach allows it to tailor its assessments to the needs of each software project to which it is applied.
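The feedback loop can likewise be sketched with a single logistic neuron trained by batch gradient descent, used here as a hypothetical stand-in for the SAA network. The features (`is_xss` and the component's vulnerability score), the data, and the choice to retrain from scratch on the corrected labels are all assumptions made for illustration; how SACA actually performs retraining is not specified here. The developer of an offline application marks the XSS alerts as non-critical, and the retrained model's score for a new XSS alert drops accordingly.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, x):
    """Criticality score of feature vector x; w[-1] is the bias term."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w[:-1], x)) + w[-1])

def train(data, epochs=5000, lr=1.0):
    """Fit one logistic neuron with batch gradient descent on log-loss."""
    n = len(data[0][0])
    w = [0.0] * (n + 1)
    for _ in range(epochs):
        grad = [0.0] * (n + 1)
        for x, y in data:
            err = predict(w, x) - y  # derivative of log-loss w.r.t. the logit
            for i, xi in enumerate(x):
                grad[i] += err * xi
            grad[-1] += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w

def apply_feedback(data, corrections):
    """Overwrite the labels of alerts the user corrected (features -> label)."""
    return [(x, corrections.get(x, y)) for x, y in data]

# Hypothetical features: (is_xss, vulnerability score). Label 1 = critical.
generic = [((1, 0.2), 1), ((1, 0.8), 1), ((0, 0.9), 1),
           ((0, 0.1), 0), ((0, 0.3), 0)]
model = train(generic)

# Offline application: the developer marks the XSS alerts as non-critical.
feedback = {(1, 0.2): 0, (1, 0.8): 0}
adapted = train(apply_feedback(generic, feedback))

query = (1, 0.5)  # a new XSS alert in a moderately risky component
print(f"before: {predict(model, query):.2f}  after: {predict(adapted, query):.2f}")
```

Since the query lies midway between the two corrected XSS alerts, a linear model that fits both corrected labels must also score it below 0.5, which is why the adapted model's assessment flips.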

The Security Alerts Assessor (SAA) is built as part of the Security Assurance Module of the Software Security-by-Design (SSD) Platform of the IoTAC project. More information about the SAA, including the construction of the machine learning models and the evaluation results, can be found in a recent publication that is available here. More information about the SSD Platform can be found in our previous post.

[1] https://www.microsoft.com/en-us/securityengineering/sdl

[2] https://owasp.org/www-project-samm/

[3] http://www.swsec.com/resources/touchpoints/

[4] https://www.bsimm.com/